by Christine Milne, on Global Greens: http://www.globalgreens.org/news/fossil-fuel-free-world-should-be-front-and-centre-us-all-2015-christine-milne

The Lima climate talks did not go far enough to engender confidence in an ambitious global pact in Paris, but they have pulled negotiations back from the brink of collapse.

If this UN process is to change for the better, we must accept that two realities are being lived by rich and poor nations, and Australia must stop being miserly and obstructive.

Developed countries want to focus on mitigation. Developing countries, like our Pacific Island neighbours, are struggling with the impacts of ever-worsening extreme weather events, as well as sea level rise, and want more focus on climate finance, adaptation, and loss and damage.

It is these poorest and least developed countries, which did nothing historically to cause the problem, that have the least capacity to cope now. The issues of climate finance and loss and damage will not go away. Failure to address them will jeopardise a good agreement in Paris. It is proof that failure to act on climate costs more.

One disappointment for me was that the rest of the world allowed Australia to get away with rorting the accounting rules yet again on land use, land-use change and forestry (LULUCF). It was bad enough that it happened in the Kyoto Protocol's first commitment period, but allowing it to carry over into the second means Australia will have to do very little to achieve its 5% emissions reduction target to 2020.

As to the overall negotiations, it is time to rethink the presidential, top-down style of negotiating, where, in the face of bogged-down negotiations, the COP President develops his or her own agreement and tries to deliver it to the rest of the world.

This isn't the way it was pre-Copenhagen, and the method has proven itself a failure. We need to go back to putting a representative of each bloc in a room and letting them work it out.

There will be sadness and frustration that these talks have again prioritised short-term national economic self-interest over the global commons or common interest, but what Lima has done is reinforce the power of civil society to bring about change in spite of governments, not because of them.

As with other COPs, the People's Climate March was fantastic, with 15,000 people taking part. I enjoyed being there with people from all over South America, but particularly people from Peru who were protesting the loss of glaciers and highlighting water and food security. It was terrific to have a Global Greens contingent in the March.

Whether governments like it or not, the divestment movement is gaining momentum. With a phase-out of fossil fuels in the mix for Paris, it will be part of the global conversation in 2015, and this is where we Greens in Australia need the Global Greens to be on the ball.

Australia will try to have the phase-out of fossil fuel use removed from the text. It is critical that Greens from all over the world influence their governments not to give in to fossil fuel nations like Australia and Canada.

A fossil-fuel free world by 2050 should be front and centre for us all in 2015. Without it we have no hope of constraining global warming to two degrees.

Senator Christine Milne, Leader of the Australian Greens.

This site has been inspired by the work of Dr David Korten who argues that capitalism is at a critical juncture due to environmental, economic and social breakdown. This site argues for alternatives to capitalism in order to create a better world.

Wednesday, December 31, 2014

A Fossil-Fuel Free World Should be Front and Centre for Us All in 2015: Christine Milne

Monday, December 29, 2014

Why Are we Morally Obligated to Fight Climate Change?

Logo of the Committee on Climate Change (Pic: Wikipedia)

In 2015, humankind will face its greatest challenge ever - to achieve a binding global agreement to reduce carbon emissions and avert catastrophic run-away global warming.

The Conference of the Parties will hold its next climate conference - COP21 - in Paris in December. 2015 will be the most critical year the climate movement has yet faced. Many experts insist that this is our last chance to begin the transition to a zero-carbon future.

I’m pleased to feature Margaret Klein’s (Climate Psychologist) article, What Climate Change Asks of Us: Moral Obligation, Mobilization and Crisis Communication in BoomerWarrior. The article will be presented in three parts. Part 1 asks the question, why are we morally obligated to fight climate change? (Rolly Montpellier, Managing Editor, BoomerWarrior.Org).

Why are we morally obligated to fight climate change?

Fighting climate change is the ultimate moral obligation. Climate change is a crisis, and crises alter morality. Climate change is on track to cause the extinction of half the species on earth and, through a combination of droughts, famines, displaced people, failed states and pandemics, the collapse of civilization within this century.

If this horrific destructive force is to be abated, it will be due to the efforts of people who are currently alive. The future of humanity falls to us. This is an unprecedented moral responsibility, and we are by and large failing to meet it.

Indeed, most of us act as though we are not morally obligated to fight climate change, and those who do recognize their obligation are largely confused about how to meet it.

Crises alter morality; they alter what is demanded of us if we want to be considered good, honorable people. For example, having a picnic in the park is morally neutral. But if, during your picnic, you witness a group of children drowning and you continue eating and chatting, passively ignoring the crisis, you have become monstrous.

A stark historical example of crisis morality is the Holocaust: history judges those who remained passive during that fateful time. Simply being a private citizen (a “Good German”) is not considered honorable or morally acceptable in retrospect. Passivity, in a time of crisis, is complicity. It is a moral failure. Crises demand that we actively engage; that we rise to the challenge; that we do our best.

What is the nature of our moral obligation to fight climate change?

Our first moral obligation is to assess how we can most effectively help. While climate change is increasingly recognized as a moral issue, the question “How can a person most effectively engage in fighting climate change?” is rarely seriously considered or discussed.

In times of crisis, we can easily become overwhelmed with fear and act impetuously to discharge those feelings, just to “do something.” We may default to popular or well-known activist tactics, such as writing letters to our members of Congress or protesting fossil-fuel infrastructure projects, without rigorously assessing whether this is the best use of our time and talents.

The question of “how can I best help” is particularly difficult for people to contemplate because climate change requires collective emergency action, and we live in a very individualistic culture. It can be difficult for an individual to imagine themselves as helping to create a social and political movement; helping the group make a shift in perspective and action.

Instead of viewing themselves as possibly influencing the group, many people focus entirely on themselves, attempting to reduce their personal carbon footprint. This offers a sense of control and moral achievement, but it is illusory; it does not contribute (at least not with maximal efficacy) to creating the collective response necessary.

We need to mobilize, together

Climate change is a crisis, and it requires a crisis response. A wide variety of scientists, scholars, and activists agree: the only response that can save civilization is an all-out, whole-society mobilization[i].

World War II provides an example of how the United States accomplished this in the past. We converted our industry from consumer-based to mission-based in a matter of months; oriented national and university research toward the mission, and mobilized the American citizenry toward the war effort in a wide variety of ways.

Major demographic shifts were made to facilitate the mission, which was regarded as America’s sine qua non; for example, 10% of Americans moved to work in a “war job,” women worked in factories for the first time, and racial integration took steps forward. Likewise, we must give the climate effort everything we have, for if we lose, we may lose everything.

Where we are

While the need for a whole-society, whole-economy mobilization to fight climate change is broadly understood by experts, we are not close to achieving it as a society. Climate change ranks at the bottom of the issues that citizens are concerned about[ii].

The climate crisis is rarely discussed in social or professional situations. This climate silence is mirrored in the media and political realm: for example, climate change wasn’t even mentioned in the 2012 presidential debates.

When climate change is discussed, it is either discussed as a “controversy” or a “problem” rather than the existential emergency that it actually is. Our civilization, planet, and each of us individually are in an acute crisis, but we are so mired in individual and collective denial and distortion that we fail to see it clearly.

The house is on fire, but we are still asleep, and our opportunity for being able to save ourselves is quickly going up in smoke.

Watch for Part 2 of What Climate Change Asks of Us: Moral Obligation, Mobilization and Crisis Communication.

References:

[i] Selected advocates of a WWII-scale climate mobilization: Lester Brown, 2004; David Spratt and Philip Sutton, 2008; James Hansen, 2008; Mark Delucchi and Mark Jacobson, 2008; Paul Gilding, 2011; Joseph Romm, 2012; Michael Hoexter, 2013; Mark Bittman, 2014.

[ii] Riffkin, 2014. “Climate Change Not a Top Worry in US.” Gallup Politics.

___

Margaret Klein is a therapist turned advocate. She is the co-founder of The Climate Mobilization and the creator of The Climate Psychologist. She earned her doctorate in clinical psychology from Adelphi University and also holds a BA in Social Anthropology from Harvard.

Margaret applies her psychological and anthropological knowledge to solving climate change. You can follow her and Climate Mobilization on Twitter: @ClimatePsych / @MobilizeClimate and also on Facebook.

Labels: Climate Change, Community Resilience, Social Change

Sunday, December 28, 2014

Fukushima and the Institutional Invisibility of Nuclear Disaster

by John Downer, The Ecologist: http://www.theecologist.org/News/news_analysis/2684383/fukushima_and_the_institutional_invisibility_of_nuclear_disaster.html

Fukushima Daiichi, 24/3/2011 (deedavee easyflow, Flickr)

The nuclear industry and its supporters have contrived a variety of narratives to justify and explain away nuclear catastrophes, writes John Downer. None of them actually holds water, yet they serve their purpose: to command political and media heights, and reassure public sentiment on 'safety'. But if it's so safe, why the low limits on nuclear liabilities?

In a truly competitive marketplace, unfettered by subsidies, no-one would have built a nuclear reactor in the past, no-one would build one today, and anyone who owns a reactor would exit the nuclear business as quickly as possible.

In doing so, he exemplified the many early accounts of Fukushima that emphasised the improbable nature of the earthquake and tsunami that precipitated it.

A range of professional bodies made analogous claims around this time, with journalists following their lead. This lamentation, by a consultant writing in the New American, is illustrative of the general tone:

" ... the Fukushima 'disaster' will become the rallying cry against nuclear power. Few will remember that the plant stayed generally intact despite being hit by an earthquake with more than six times the energy the plant was designed to withstand, plus a tsunami estimated at 49 feet that swept away backup generators 33 feet above sea level."

The explicit or implicit argument in all such accounts is that Fukushima's proximate causes are so rare as to be almost irrelevant to nuclear plants in the future.

Nuclear power is safe, they suggest, except against the specific kind of natural disaster that struck Japan, which is both a specifically Japanese problem and one that is unlikely to re-occur, anywhere, in any realistic timeframe.

An appealing but tenuous logic

The logic of this is tenuous on various levels. The 'improbability' of the natural disaster is disputable, for one, as there were good reasons to believe that neither the earthquake nor the tsunami should have been surprising. The area was well known to be seismically active, after all, and the quake, when it came, was only the fourth largest of the last century.

The Japanese nuclear industry had even confronted its seismic under-preparedness four years earlier, on 16 July 2007, when an earthquake of unanticipated magnitude damaged the Kashiwazaki-Kariwa nuclear plant.

This had led several analysts to highlight Fukushima's vulnerability to earthquakes, but officials had said much the same then as they now said in relation to Fukushima. The tsunami was not without precedent either.

Geologists had long known that a similar event had occurred in the same area in July 869. This was a long time ago, certainly, but the data indicated a thousand-year return cycle.
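A 'thousand-year return cycle' sounds remote, but it looks different when set against the working life of a plant. The sketch below is a rough illustration only: it assumes a constant annual probability of 1 in 1,000 and independence between years (a textbook simplification, not a claim made in the article), and the 40-year operating life is a hypothetical figure chosen for illustration.

```python
def prob_at_least_one(annual_prob: float, years: int) -> float:
    """Probability of at least one event over `years`.

    Assumes (for illustration) a constant per-year probability and
    independence between years.
    """
    return 1.0 - (1.0 - annual_prob) ** years


RETURN_PERIOD_YEARS = 1_000   # the "thousand-year return cycle" from the text
OPERATING_LIFE_YEARS = 40     # hypothetical operating life, for illustration only

annual = 1.0 / RETURN_PERIOD_YEARS
p = prob_at_least_one(annual, OPERATING_LIFE_YEARS)
print(f"Chance of at least one such event in {OPERATING_LIFE_YEARS} years: {p:.1%}")
```

Under those assumptions the chance comes out at roughly 4% for a single plant over that period, which is far from negligible for a hazard that could cause a meltdown.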

Several reports, meanwhile, have suggested that the earthquake alone might have precipitated the meltdown, even without the tsunami - a view supported by a range of evidence, from worker testimony, to radiation alarms that sounded before the tsunami.

Haruki Madarame, the head of Japan's Nuclear Safety Commission, has criticised Fukushima's operator, TEPCO, for denying that it could have anticipated the flood.

The claim that Japan is 'uniquely vulnerable' to such hazards is similarly disputable. In July 2011, for instance, the Wall Street Journal reported on private emails at the US Nuclear Regulatory Commission (NRC) showing that the industry and its regulators had evidence that many US reactors were at risk from earthquakes that had not been anticipated in their design.

It noted that the regulator had taken very little or no action to accommodate this new understanding. As if to illustrate their concern, on 23 August 2011, less than six months after Fukushima, North Anna nuclear plant in Mineral, Virginia, was rocked by an earthquake that exceeded its design-basis predictions.

Every accident is 'unique' - just like the next one

There is, moreover, a larger and more fundamental reason to doubt the 'unique events or vulnerabilities' narrative, which lies in recognising its implicit assertion that nuclear plants are safe against everything except the events that struck Japan.

It is important to understand that those who assert that nuclear power is safe because the 2011 earthquake and tsunami will not re-occur are, essentially, saying that although the industry failed to anticipate those events, it has anticipated all the others.

Yet even a moment's reflection reveals that this is highly unlikely. It supposes that experts can be sure they have comprehensively predicted all the challenges that nuclear plants will face in their lifetimes (or, in engineering parlance: that the 'design basis' of every nuclear plant is correct), even though a significant number of technological disasters, including Fukushima, have resulted, at least in part, from conditions that engineers failed to even consider.

As Sagan points out: "things that have never happened before, happen all the time". The terrorist attacks of 9/11 are perhaps the most iconic illustration of this dilemma but there are many others.

Perrow (2007) painstakingly explores a landscape of potential disaster scenarios that authorities do not formally recognise, but it is highly unlikely that he has considered them all.

More are hypothesised all the time. For instance, researchers have recently speculated about the effects of massive solar storms, which in pre-nuclear times caused electrical systems over North America and Europe to fail for weeks at a time.

Human failings that are unrepresentative and / or correctable

A second rationale that accounts of Fukushima invoke to establish that accidents will not re-occur focuses on the people who operated or regulated the plant, and the institutional culture in which they worked. Observers who opt to view the accident through this lens invariably construe it as the result of human failings - either error, malfeasance or both.

The majority of such narratives relate the failings they identify directly to Fukushima's specific regulatory or operational context, thereby portraying it as a 'Japanese' rather than a 'nuclear' accident.

Many, for instance, stress distinctions between US and Japanese regulators, often pointing out that the Japanese nuclear regulator (NISA) was subordinate to the Ministry of Economy, Trade and Industry, and arguing that this created a conflict of interest between NISA's responsibilities for safety and the Ministry's responsibility to promote nuclear energy.

They point, for instance, to the fact that NISA had recently been criticised by the International Atomic Energy Agency (IAEA) for a lack of independence, in a report occasioned by earthquake damage at another plant. Or to evidence that NISA declined to implement new IAEA standards out of fear that they would undermine public trust in the nuclear industry.

Other accounts point to TEPCO, the operator of the plant, and find it to be distinctively "negligent". A common assertion in this vein, for instance, is that it concealed a series of regulatory breaches over the years, including data about cracks in critical circulation pipes that were implicated in the catastrophe.

There are two subtexts to these accounts. Firstly, that such an accident will not happen here (wherever 'here' may be) because 'our' regulators and operators 'follow the rules'. And secondly, that these failings can be amended so that similar accidents will not re-occur, even in Japan.

Where accounts of the human failings around Fukushima do portray those failings as being characteristic of the industry beyond Japan, the majority still construe those failings as eradicable.

In March 2012, for instance, the Carnegie Endowment for International Peace issued a report that highlighted a series of organisational failings associated with Fukushima, not all of which it considered to be meaningfully Japanese.

Nevertheless, the report - entitled 'Why Fukushima was preventable' - argued that such failings could be resolved. "In the final analysis", it concluded, "the Fukushima accident does not reveal a previously unknown fatal flaw associated with nuclear power."

The same message echoes in the many post-Fukushima actions and pronouncements of nuclear authorities around the world promising managerial reviews and reforms, such as the IAEA's hastily announced 'five-point plan' to strengthen reactor oversight.

Myths of exceptionality

As with the previous narratives about exogenous hazards, however, the logic of these 'human failure' arguments is also tenuous. Despite the editorial consternation that revelations about Japanese malfeasance and mistakes have inspired, for instance, there are good reasons to believe that neither was exceptional.

It would be difficult to deny that Japan had a first-class reputation for managing complex engineering infrastructures, for instance. As the title of one op-ed in the Washington Post puts it: "If the competent and technologically brilliant Japanese can't build a completely safe reactor, who can?"

Reports of Japanese management failings must be considered in relation to the fact that reports of regulatory shortcomings, operator error, and corporate malfeasance abound in every state with nuclear power and a free press.

There also exists a long tradition of accident investigations finding variations in national safety practices that are later rejected on further scrutiny.

When Western experts blamed Chernobyl on the practices of the Soviet nuclear industry, for example, they unconsciously echoed Soviet narratives that had highlighted the inferiority of Western safety cultures to argue that an accident like Three Mile Island could never happen in the USSR.

Arguments suggesting that 'human' problems are potentially solvable are similarly difficult to sustain, for there are compelling reasons to believe that operational errors are an inherent property of all complex socio-technical systems.

Close accounts of even routine technological work, for instance, routinely find it to be necessarily and unavoidably 'messier' in practice than it appears on paper.

Thus both human error and non-compliance are ambiguous concepts. As Wynne (1988: 154) observes: "... the illegitimate extension of technological rules and practices into the unsafe or irresponsible is never clearly definable, though there is ex-post pressure to do so." The culturally satisfying nature of 'malfeasance explanations' should, by itself, be cause for circumspection.

These studies undermine the notion of 'perfect rule compliance' by showing that even the most expansive stipulations sometimes require interpretation and do not relieve workers of having to make decisions in uncertain conditions.

In this context we should further recognise that accounts that show Fukushima, specifically, was preventable are not evidence that nuclear accidents, in general, are preventable.

To argue from analogy: it is true to say that any specific crime might have been avoided (otherwise it wouldn't be a crime), but we would never deduce from this that crime, the phenomenon, is eradicable. Human failure will always be present in the nuclear sphere at some level, as it is in all complex socio-technical systems.

And, relative to the reliability demanded of nuclear plants, it is safe to assume that this level will always be too high or, at least, that our certainty regarding it will be too low. While human failures and malfeasance are undoubtedly worth exploring, understanding and combating, therefore, we should avoid the conclusion that they can be 'solved'.

Plant design is unrepresentative and/or correctable

Parallel to narratives about Fukushima's circumstances and operation, outlined above, are narratives that emphasise the plant itself.

These limit the relevance of the accident to the wider nuclear industry by arguing that the design of its reactor (a GE Mark-1) was unrepresentative of most other reactors, while simultaneously promising that any reactors that were similar enough to be dangerous could be rendered safe by 'correcting' their design.

Accounts in this vein frequently highlight the plant's age, pointing out that reactor designs have changed over time, presumably becoming safer. A UK civil servant exemplified this narrative, and the strategic decision to foreground it, in an internal email (later printed in the Guardian [2011]), in which he asserted that

"We [The Department of Business, Innovation and Skills] need to … show that events in Japan, whilst looking dramatic, are all part of the safety processes of this 1960's reactor."

Stressing the age of the reactor in this way became a mainstay of Fukushima discourse in the disaster's immediate aftermath. Guardian columnist George Monbiot (2011b), for instance, described Fukushima as "a crappy old plant with inadequate safety features".

He concluded that its failure should not speak to the integrity of later designs, like that of the neighboring plant, Fukushima 'Daini', which did not fail in the tsunami. "Using a plant built 40 years ago to argue against 21st-century power stations", he wrote, "is like using the Hindenburg disaster to contend that modern air travel is unsafe."

Other accounts highlighted the reactor's design but focused on more generalisable failings, such as the "insufficient defense-in-depth provisions for tsunami hazards" (IAEA 2011a: 13), which could not be construed as indigenous only to the Mark-1 reactors or their generation.

The implication - we can and will fix all these problems

These failings could be corrected, however, or such was the implication. The American Nuclear Society set the tone, soon after the accident, when it reassured the world that: "the nuclear power industry will learn from this event, and redesign our facilities as needed to make them safer in the future."

Almost every official body with responsibility for nuclear power followed in their wake. The IAEA, for instance, orchestrated a series of rolling investigations, which eventually culminated in the announcement of its 'Action Plan on Nuclear Safety' and a succession of subsequent meetings where representatives of different technical groups could pool their analyses and make technical recommendations.

The groups invariably conclude that "many lessons remain to be learned" and recommend further study and future meetings. Again, however, there is ample cause for scepticism.

Firstly, there are many reasons to doubt that Fukushima's specific design or generation made it exceptionally vulnerable. As noted above, for instance, many of the specific design failings identified after the disaster - such as the inadequate water protection around reserve power supplies - were broadly applicable across reactor designs.

And even if the reactor design or its generation were exceptional in some ways, that exceptionalism is decidedly limited. There are currently 32 Mark-1 reactors in operation around the world, and many others of a similar age and generation, especially in the US, where every reactor currently in operation was commissioned before the Three Mile Island accident in 1979.

Secondly, there is little reason to believe that most existing plants could be retrofitted to meet all Fukushima's lessons. Significantly raising the seismic resilience of a nuclear plant, for instance, implies such extensive design changes that it might be more practical to decommission the entire structure and rebuild from scratch.

This perhaps explains why progress has been halting on the technical recommendations. It might be true that different or more modern reactors are safer, therefore, but these are not the reactors we have.

In March 2012, the NRC did announce some new standards pertaining to power outages and fuel pools - issuing three 'immediately effective' orders requiring operators to implement some of the more urgent recommendations. The required modifications were relatively modest, however, and 'immediately' in this instance meant 'by December 31st 2016'.

Meanwhile, the approvals for four new reactors the NRC granted around this time contained no binding commitment to implement the wider lessons it derived from Fukushima. In each case, the increasingly marginalised NRC chairman, Gregory Jaczko, cast a lone dissenting vote. He was also the only commissioner to object to the 2016 timeline.

Complex systems' ability to keep on surprising

Finally, and most fundamentally, there are many a priori reasons to doubt that any reactor design could be as safe as risk analyses suggest. Observers of complex systems have outlined strong arguments for why critical technologies are inevitably prone to some degree of failure, whatever their design.

The most prominent such argument is Perrow's Normal Accident Theory (NAT), with its simple but profound probabilistic insight that accidents caused by very improbable confluences of events (that no risk calculation could ever anticipate) are 'normal' in systems where there are many opportunities for them to occur.

From this perspective, the 'we-found-the-flaw-and-fixed-it' argument is implausible because it offers no way of knowing how many 'fateful coincidences' the future might hold.

'Lesson 1' of the IAEA's preliminary report on Fukushima is that the " ... design of nuclear plants should include sufficient protection against infrequent and complex combinations of external events."

NAT explains why an irreducible number of these 'complex combinations' must be forever beyond the reach of formal analysis and managerial control.

A different way of demonstrating much the same conclusion is to point to the fundamental epistemological ambiguity of technological knowledge, and to how the significance of this ambiguity is magnified in complex, safety-critical systems due to the very high levels of certainty these systems require.

Judgements become more significant in this context because they have to be absolutely correct. There is no room for error bars in such calculations. It makes little sense to say that we are 99% certain a reactor will not explode, but only 50% sure that this number is correct.

Perfect safety can never be guaranteed

Viewed from this perspective, it becomes apparent that complex systems are likely to be prone to failures arising from erroneous beliefs that are impossible to predict in advance, which I have elsewhere called 'Epistemic Accidents'.

This is essentially to say that the 'we-found-the-flaw-and-fixed-it' argument cannot guarantee perfect safety because it offers no way of knowing how many new 'lessons' the future might hold.

Just as it is impossible for engineers and regulators to know for certain that they have anticipated every external event a nuclear plant might face, so it is impossible for them to know that their understanding of the system itself is completely accurate.

Increased safety margins, redundancy, and defense-in-depth might undoubtedly improve reactor safety, but no amount of engineering wizardry can offer perfect safety, or even safety that is 'knowably' of the level that nuclear plants require. As Gusterson (2011) puts it: " ... the perfectly safe reactor is always just around the corner."

Nuclear authorities sometimes concede this. After the IAEA’s 2012 recommendations to pool insights from the disaster, for instance, the meeting’s chairman, Richard Meserve, summarised: "In the nuclear business you can never say, 'the task is done'."

Instead they promise improvement. "The Three Mile Island and Chernobyl accidents brought about an overall strengthening of the safety system", Meserve continued. "It is already apparent that the Fukushima accident will have a similar effect."

The real question, however, is when will the safety be strong enough? It wasn’t after Three Mile Island or Chernobyl, so why should Fukushima be any different?

The reliability myth

This is all to say, in essence, that it is misleading to assert that an accident of Fukushima's scale will not re-occur. For there are credible reasons to believe that the reliability required of reactors is not calculable, and there are credible reasons to believe that the actual reliability of reactors is much lower than is officially calculated.

These limitations are clearly evinced by the actual historical failure rate of nuclear reactors. Even the most rudimentary calculations show that civil nuclear accidents have occurred far more frequently than official reliability assessments have predicted.

The exact numbers vary, depending on how one classifies 'an accident' (whether Fukushima counts as one meltdown or three, for example), but Ramana (2011) puts the historical rate of serious meltdowns at 1 in every 3,000 reactor years, while Taebi et al. (2012: 203fn) put it at somewhere between 1 in every 1,300 and 1 in every 3,600 reactor years. Either way, the implied reliability is orders of magnitude lower than assessments claim.

In a recent declaration to a UK regulator, for instance, Areva, a prominent French nuclear manufacturer, invoked probabilistic calculations to assert that the likelihood of a "core damage incident" in its new 'EPR' reactor was of the order of one incident, per reactor, every 1.6 million years (Ramana 2011).
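The gap between the historical rates and Areva's figure can be made concrete with a little arithmetic. The sketch below simply divides the quoted claim by each historical estimate and takes the base-10 logarithm. It treats 'serious meltdown' and 'core damage incident' as comparable categories and ignores the difference between a new EPR design and the existing fleet; both are simplifying assumptions, not claims from the article.

```python
import math

# Historical intervals quoted in the text: reactor-years per serious meltdown.
HISTORICAL_ESTIMATES = {
    "Ramana (2011)": 3_000,
    "Taebi et al. (2012), 1 in 1,300": 1_300,
    "Taebi et al. (2012), 1 in 3,600": 3_600,
}

# Areva's quoted EPR claim: reactor-years per core damage incident.
AREVA_EPR_CLAIM = 1_600_000


def reliability_gap(historical: float, claimed: float) -> tuple[float, float]:
    """Return (ratio, orders of magnitude) by which `claimed` exceeds `historical`."""
    ratio = claimed / historical
    return ratio, math.log10(ratio)


for source, years in HISTORICAL_ESTIMATES.items():
    ratio, orders = reliability_gap(years, AREVA_EPR_CLAIM)
    print(f"{source}: claim is {ratio:,.0f}x longer (~{orders:.1f} orders of magnitude)")
```

With these inputs the claimed interval is several hundred to over a thousand times longer than the historical ones, roughly two to three orders of magnitude.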

Two: the accident was tolerable

The second basic narrative through which accounts of Fukushima have kept the accident from undermining the wider nuclear industry rests on the claim that its effects were tolerable - that even though the costs of nuclear accidents might look high, when amortised over time they are acceptable relative to the alternatives.

The 'accidents are tolerable' argument is invariably framed in relation to the health effects of nuclear accidents. "As far as we know, not one person has died from radiation", Sir David King told a press conference in relation to Fukushima, neatly expressing a sentiment that would be echoed in editorials around the world in the aftermath of the accident.

"Atomic energy has just been subjected to one of the harshest of possible tests, and the impact on people and the planet has been small", concluded George Monbiot in one characteristic column.

"History suggests that nuclear power rarely kills and causes little illness", the Washington Post reassured its readers (Brown 2011; see also McCulloch 2011; Harvey 2011). "Fukushima's Refugees Are Victims Of Irrational Fear, Not Radiation", declared the title of an article in Forbes (Conca 2012).

In its more sophisticated forms, this argument draws on comparisons with other energy alternatives. A 2004 study by the American Lung Association argues that coal-fired power plants shorten the lives of 24,000 people every year.

Chernobyl, widely considered to be the most poisonous nuclear disaster to date, is routinely thought to be responsible for around 4,000 past or future deaths.

Even if the effects of Fukushima are comparable (which the majority of experts insist they are not), then by these statistics the human costs of nuclear energy seem almost negligible, even when accounting for its periodic failures.

Such numbers are highly contestable, however, partly because there are many more coal plants than nuclear plants (a fairer comparison might consider deaths per kilowatt-hour), but mostly because calculations of the health effects of nuclear accidents are fundamentally ambiguous.

Chronic radiological harm can manifest in a wide range of maladies, none of which are clearly distinguishable as being radiologically induced - they have to be distinguished statistically - and all of which have a long latency, sometimes of decades or even generations.

How many died? It all depends ...

So it is that mortality estimates about nuclear accidents inevitably depend on an array of complex assumptions and judgments that allow for radically divergent - but equally 'scientific' - interpretations of the same data. Some claims are more compelling than others, of course, but 'truth' in this realm does not 'shine by its own lights' as we invariably suppose it ought.

Take, for example, the various studies of Chernobyl's mortality, from which estimates of Fukushima's are derived. The models underlying these studies are themselves derived from data from Hiroshima and Nagasaki survivors, the accuracy and relevance of which have been widely criticised, and they require the modeller to make a range of choices with no obviously correct answer.

Modellers must select between competing theories of how radiation affects the human body, for instance; between widely varying judgments about the amount of radioactive material the accident released; and much more. Such choices are closely interlinked and mutually dependent.

Estimates of the composition and quantities of the isotopes released in the accident, for example, will affect models of their distribution, which, in conjunction with theories of how radiation affects the human body, will affect conclusions about the specific populations at risk.

This, in turn, will affect whether a broad spike in mortality should be interpreted as evidence of radiological harm or as evidence that many seemingly radiation-related deaths are actually symptomatic of something else. And so on ad infinitum: a dynamic tapestry of theory and justification, where subtle judgements reverberate throughout the system.

The net result is that quiet judgements concerning the underlying assumptions of an assessment, usually made in the very earliest stages of a study and all but invisible to most observers, have dramatic effects on its findings. This is visible in the widely divergent assertions made about Chernobyl's death toll.

The 'orthodox' mortality figure cited above - no more than 4,000 deaths - comes from the 2005 IAEA-led 'Chernobyl Forum' report. Or rather, from the heavily bowdlerised press release from the IAEA that accompanied its executive summary. The actual health section of the report alludes to much higher numbers.

Yet the '4,000 deaths' number is endorsed and cited by most international nuclear authorities, although it stands in stark contrast to the findings of similar investigations.

Two reports published the following year, for example, offer much higher figures: one estimating 30,000 to 60,000 cancer deaths (Fairlie & Sumner 2006); the other 200,000 or more (Greenpeace 2006: 10).

In 2009, meanwhile, the New York Academy of Sciences published an extremely substantive Russian report by Yablokov that raised the toll even further, concluding that in the years up to 2004, Chernobyl caused around 985,000 premature cancer deaths worldwide.

Between these two figures - 4,000 and 985,000 - lie a host of other expert estimations of Chernobyl's mortality, many of them seemingly rigorous and authoritative. The Greenpeace report tabulates some of the varying estimates and correlates them to differing methodologies.

Science? Or propaganda?

Different sides in this contest of numbers routinely assume their rivals are actively attempting to mislead: a wide range of critics argue that most official accounts are authored by industry apologists who 'launder' nuclear catastrophes by dicing evidence of their human fallout into an anodyne melee of claims and counterclaims.

When John Gofman, a former University of California Berkeley Professor of Medical Physics, wrote that the Department of Energy was "conducting a Joseph Goebbels propaganda war" by advocating a conservative model of radiation damage, for instance, his charge was more remarkable for its candor than its substance.

And there is certainly some evidence for this. There can be little doubt that in the past the US government has intentionally clouded the science of radiation hazards to assuage public concerns. The 1995 US Advisory Committee on Human Radiation Experiments, for instance, concluded that Cold War radiation research was heavily sanitised for political ends.

A former AEC (NRC) commissioner testified in the early 1990s that: "One result of the regulators' professional identification with the owners and operators of the plants in the battles over nuclear energy was a tendency to try to control information to disadvantage the anti-nuclear side."

It is perhaps more useful, however, to say that each side is discriminating about the realities to which it adheres.

In this realm there are no entirely objective facts, and with so many judgements involved it is easy to imagine how even small, almost invisible biases might shape the findings of seemingly objective hazard calculations.

Indeed, many of the judgements that separate divergent nuclear hazard calculations are inherently political, with the result that there can be no such thing as an entirely neutral account of nuclear harm.

Researchers must decide whether a 'stillbirth' counts as a 'fatality', for instance. They must decide whether an assessment should emphasise deaths exclusively, or if it should encompass all the injuries, illnesses, deformities and disabilities that have been linked to radiation. They must decide whether a life 'shortened' constitutes a life 'lost'.

There are no correct answers to such questions. More data will not resolve them. Researchers simply have to make choices. The net effect is that the hazards of any nuclear disaster can only be glimpsed obliquely through a distorted lens.

So much ambiguity and judgement is buried in even the most rigorous calculations of Fukushima's health impacts that no study can be definitive. All that remains are impressions and, for the critical observer, a vertiginous sense of possibility.

Estimating the costs - how many $100s of billions?

The only thing to be said for sure is that declarative assurances of Fukushima's low death toll are misleading in their surety. Given the intense fact-figure crossfire around radiological mortality, it is unhelpful to view Fukushima purely through the lens of health.

In fact, the emphasis on mortality might itself be considered a way of minimising Fukushima, considering that there are other - far less ambiguous - lenses through which to view the disaster's consequences.

Fukushima's health effects are contested enough that they can be interpreted in ways that make the accident look tolerable, but it is much more challenging to make a case that it was tolerable in other terms.

Take, for example, the disaster's economic impact. The intense focus on the health and safety effects of Fukushima has all but eclipsed its financial consequences, yet the latter are arguably more significant and are certainly less ambiguous.

Nuclear accidents incur a vast spectrum of costs. There are direct costs relating to the need to seal off the reactor; study, monitor and mitigate its environmental fallout; resettle, compensate and treat the people in danger; and so forth.

Over a quarter of a century after Chernobyl, the accident still haunts Western Europe, where government scientists in several countries continue to monitor certain meats, and keep some from entering the food chain.

Then there is an array of indirect costs that arise from externalities, such as the loss of assets like farmland and industrial facilities; the loss of energy from the plant and those around it; the impact of the accident on tourism; and so forth. The exact economic impact of a nuclear accident is almost as difficult to estimate as its mortality, and projections differ for the same fundamental reasons.

The evacuation zone around Fukushima - an area of around 966 sq km, much of which will be uninhabitable for generations - covers 3% of Japan, a densely populated and mountainous country where only 20% of the land is habitable in the first place.

Economic projections do not differ to the same degree, however, and in contrast to Fukushima's mortality there is little contention that its financial costs will be enormous.

By November of 2013, the Japanese government had already allocated over 8 trillion yen (roughly $80 billion or £47 billion) to Fukushima's clean-up alone - a figure that excluded the cost of decommissioning the six reactors, a process expected to take decades and cost tens of billions of dollars.

Independent experts have estimated the clean-up cost to be in the region of $500 billion (Gundersen & Caldicott 2012). These estimates, moreover, exclude most of the indirect costs outlined above, such as the disaster's costs to the food and agriculture industries, which the Japanese Ministry of Agriculture, Forestry and Fisheries (MAFF) has estimated at 2,384.1 billion yen (roughly $24 billion).

Of these competing estimates, the higher numbers seem more plausible. The notoriously conservative report of the Chernobyl Forum estimated that the cost of that accident had already mounted to "hundreds of billions of dollars" after just 20 years, and it seems unlikely that Fukushima's three meltdowns could cost less.

Even if we assume that Chernobyl was more hazardous than Fukushima (a common conviction that is incrementally becoming more tenuous), it remains true that the same report projected the 30-year cost to Belarus alone to be US$235 billion, and that Belarus's lost opportunities, compensation payments and clean-up expenditures are unlikely to rival Japan's.

Consider, for instance, Japan's much higher cost of living, its indisputable loss of six reactors, its decision to at least shutter the remainder of its nuclear plants, and many other factors. The Chernobyl reactor did not even belong to Belarus; it is in what is now Ukraine.

The nuclear disaster liability swindle

To put these figures into perspective, consider that nuclear utilities in the US are required to create an industry-wide insurance pool of only about $12 billion for accident relief, and are protected against further losses by the Price-Anderson Act, by which the US Congress has socialised the costs of any nuclear disaster.

The nuclear industry needs such extraordinary government protection in the US, as it does in all countries, because - for all the authoritative, blue-ribbon risk assessments demonstrating its safety - the reactor business, almost uniquely, is unable to secure private insurance.

The industry's unique dependence on limited liability reflects the fact that no economic justification for atomic power could concede the inevitability of major accidents like Fukushima and remain viable or competitive.

As Mark Cooper, the author of a 2012 report on the economics of nuclear disaster, has put it:

"If the owners and operators of nuclear reactors had to face the full liability of a Fukushima-style nuclear accident or go head-to-head with alternatives in a truly competitive marketplace, unfettered by subsidies, no one would have built a nuclear reactor in the past, no one would build one today, and anyone who owns a reactor would exit the nuclear business as quickly as possible."

John Downer works at the Global Insecurities Centre, School of Sociology, Politics and International Studies, Bristol University.

This article is an extract from 'In the shadow of Tomioka - on the institutional invisibility of nuclear disaster', published by the Centre for Analysis of Risk and Regulation at the London School of Economics and Political Science.

This version has been edited to include important footnote material in the main text and to exclude most references. Scholars, scientists, researchers and others should refer to the original publication.

Saturday, December 27, 2014

Can You Guess Which Country is Winning at Conservation? Believe It or Not, Environmental Protection is Written Into the Constitution

Thimphu, Bhutan (Photo: Richard L'Anson/Getty Images)

Esha Chhabra is a journalist who covers social enterprise, technology for social impact, and development.

The Bhutanese are aiming to convert all government-owned vehicles and taxis to electric cars supplied by companies such as Nissan, Tesla, and Mahindra & Mahindra. Earlier this year, they cemented plans with Nissan to provide a few hundred Nissan Leafs to the Himalayan kingdom.

It’s a natural step for a country whose environmental policy has captured global attention. Bhutan's progressive environmental standards are so impressive, they're becoming discussion points at climate change and environmental events.

National Geographic celebrated Bhutan last month, a country it first featured in the magazine 100 years ago. Back then, British government officer and civil engineer John Claude White wrote about the country in the April 1914 edition of National Geographic, which the magazine said "lifted the veil on a mysterious land hidden in the world's highest mountains."

That mysterious land has become less exotic over the years; the Bhutanese royal family opened its doors to visitors in 1974 and introduced television to its people in 1999. The urban development that has followed has the Bhutanese government thinking more deeply about its environmental footprint.

Bhutan is a rarity, though, in that environmental preservation is written into its constitution, which states that 60% of the country's land must remain under forest.

In 1977, the World Wildlife Fund worked with Bhutan’s forestry department to safeguard nature, guiding it on how to train forest rangers, set up checkpoints, build roads for patrolling, and mark off preserved lands from the rest of the terrain. Two decades later, the government put a ban on exporting timber altogether.

Collecting deadwood is a regulated process, requiring approval. Prime Minister Tobgay explained to the Asian Development Bank that the electricity produced from its hydropower stations - hydropower being the country's largest source of income outside of tourism - is minimizing the need for firewood and consequently keeping the country’s forests intact.

On the agricultural front, Bhutanese officials announced in 2012 that they would go completely organic. Given that many farmers can't afford pesticides and chemical-based fertilizers, much of the country's agriculture could already be classified as organic and all-natural. The bigger challenge is to build a regulatory framework that can certify its farms as organic.

Of course, Bhutan hasn't forgotten about the little things either; it's working to enforce a ban on plastic bags.

Friday, December 26, 2014

INTERVIEW: Ken Greene of Hudson Valley Seed Library

For four years, Greene ran the HVSL out of that location, but in 2008 he and his business partner Doug Muller moved the HVSL onto a farm in Accord, New York where it has remained ever since.

Nowadays, the library catalog is online, the team has grown to include a dozen or so others in addition to Ken and Doug, and the library's membership boasts over 1,000 farmers and gardeners.

Shareable caught up with Greene to talk about his organization's roots, his passion for stories, and the future of seed libraries.

SHAREABLE: What inspired Hudson Valley Seed Library?

Ken Greene: I had become a seed saver in my own garden after learning about some of the global seed issues including loss of genetic diversity and consolidation of seed resources by the biotech industry. I wanted to make a small difference by taking responsibility for our local seeds and making sure they were preserved and protected.

But that didn't feel like I was doing enough. I wanted to find a way to share the seeds, and seed saving skills, with more people in my community. The more hands and gardens the seeds pass through, the more alive and protected they are for the future. I began to see seeds as having much in common with books, especially books in a library.

My deep appreciation for libraries and new-found passion for seeds were starting to become one. I began to see that every seed was a story and felt the stories were meant to be shared. Growing a seed meant growing its story and keeping it alive. I saw that libraries keep stories alive by sharing them. So, adding seeds to the library catalog seemed logical, necessary, and important.

Just as our library was making out-of-print books available to the community, we could also make heirloom seeds, many under the threat of extinction, continually available. Just as we were keeping ideas, imagination, and stories alive by sharing them in print, we could keep the genetics and the cultural stories of seeds alive by sharing them.

Just as we trusted our patrons to bring back the books they checked out so that they could continue to be shared, I wondered if we could count on gardeners to save some seeds from the plants they grew to return to the library, keeping the seeds alive and creating regionally adapted varieties.

What was the community response?

Initially people were confused about seeing seeds available to check out in the library. Luckily, the library director, Peg Lotvin, and a local farm intern who was one of the founders of BASIL, a Bay Area seed exchange, were very enthusiastic about the idea.

Over time with meetings, workshops, and putting in a seed garden around the flag pole on the front lawn, the seed library became an appreciated part of the public library, and the first of its kind in the country. The year before I quit my job to farm seed full time we had about 60 active members in the seed library.

The next year, when my partner Doug and I put the library idea online, we had 500 members. Today we have a full seed catalog that anyone can buy homegrown, independent, organic seed from and we have an active seed saving community of over 1200 gardeners and farmers who participate in our "One Seed, Many Gardens" online seed library program.

A three-pound heirloom tomato. Credit: HVSL Facebook

Other than seed preservation, what other roles does the HVSL play in the community?

I never would have imagined that our tiny seed library would grow into a full-fledged seed company and take over my life! I'm now on the Board of Directors for the Organic Seed Alliance, give lectures and teach workshops about seeds all over the country, and we are the largest producer of Northeast grown and adapted seeds in the country.

What draws you personally to seed preservation?

I love nurturing our plants through their full life-cycles and sharing the joy, magic, and abundance of seeds with others. More than preservation, I'm drawn to the idea that plants are always changing, just as we are. In order to keep these seeds alive and in the dirty hands of caring growers we need to allow them to change with us.

HVSL also does lovely art commissions for its seed packets. What's the story behind that?

I believe that artists are cultural seed savers and seed savers are agri-cultural artists. I came up with the idea of working with artists after collecting antique seed catalogs to research what varieties were being grown in our region 50-100 years ago. These old catalogs are full of art, not photographs.

Just as I want to keep the tradition of saving seeds by hand, I wanted to find a way to continue the beautiful and compelling tradition of illustrated catalogs, but in a more contemporary way. Our packs help remind us that seeds are not just a commodity to be bought and sold; they are living stories. The diversity of the artwork on our packs (each one is by a different artist) celebrates the diversity of the seeds themselves.

Seed packets from the seed library. Credit: HVSL Facebook.

A New York Times article about HVSL says you collect “cultural stories” as well as seeds. Can you speak a little more about that?

Every seed is a story. Actually, every seed is many stories: genetic stories, and human stories of travel, tragedy, and spirit. Some seed stories are tall tales, myths, or very personal stories from recent generations. We share many of these stories on our website as well as the stories of how we grow and care for the seeds in our catalog.

That article states that many of your members live in New York City. Why do you think it's important to offer your services to cities?

Many of the gardeners (and farmers!) who grow with our seeds do live in urban areas. There are more urban growers of all kinds (rooftops, containers, community gardens) than ever before. Finding the right varieties to grow in the many micro-climates that urban gardeners experience means searching out a diversity of seed sources.

Conventionally bred seeds are meant to be grown on large industrial and chemical based farms. The heirloom and open-pollinated seeds in our catalog have more flexibility, resiliency, and the most potential for adapting to urban growing environments.

What does the future look like for HVSL - and for seed libraries in general?

Over time the Hudson Valley Seed Library has become a mission-driven seed company. Our seed library model has changed to better focus on sharing high-quality seed. We based the new model on the popular “Community Reads” or “One Book, One Town” reading programs organized by book libraries all over the country where the whole town reads one book.

By encouraging every member in the Seed Library to grow the same variety in the same season, we’ll be able to teach everyone how to grow, eat, and save seeds from that variety. We connect all of our gardens into one big seed farm, growing enough seeds of each year's Community Seed variety to share with friends, family, and our communities. Enough to last.

We've also begun training small-scale farmers on how to integrate seed saving into their food farm systems - the more local seeds the better!

On another note, your farm used to be a Ukrainian summer camp?!

The land we live on and grow on is shared by a community of friends. Originally a Catskill "poor-man's" resort with a hotel, boarding houses, kitchen building, ballroom and more, the property was bought by a Ukrainian cultural camp in the late 1960s.

Our group bought it from adults who had been campers here. We're fixing up what we can save, tearing down and salvaging the structures we can't save, building soil, growing seeds, and preserving the wildness of the surrounding woods.

The Seed Library farm. Credit: HVSL Facebook.

Do you have any advice for anyone who might want to start a seed library in their own community?

Yes! Mainly - there is no one thing that is a seed library. There are now over 300 seed libraries, seed swaps, seed exchanges, and community seed banks all over the country. Each one is different from the next.

I recommend starting with a simple seed swap to see who in your community is interested in gardening and seeds and then letting the group develop their own way of sharing seeds and their stories. I help communities develop community seed saving groups and there are many more resources out there than there were 10 years ago when I started the Hudson Valley Seed Library.

And lastly, what is your favorite or most interesting new plant?

Always the hardest question! I love all of the 400 varieties in our catalog for different reasons. We have cultivated and wild flowers, vegetables, culinary and medicinal herbs, and some oddities just for fun. For food I'm most excited about our Panther Edamame - a black open-pollinated nutty-flavored edamame that we grow in partnership with the Stone Barns Center.

For cultivated flowers I'm in love with Polar Bear Zinnia, a creamy white flower. For wild flowers I'm proud to now offer Milkweed to help stem the near extinction of Monarch Butterflies, and for herbs I love Garlic Chives, which make the most amazing kimchi.

You can connect more with the Hudson Valley Seed Library via their website or Facebook.

##

Protect seed sharing in the United States by signing Shareable's petition here. State governments around the country are regulating seed libraries out of existence. Please help us stop them!

Monday, December 15, 2014

Lima Climate Agreement: Experts React

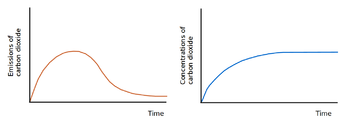

|

| Stabilizing the atmospheric concentration of CO 2 at a constant level would require emissions to be effectively eliminated (Wikipedia) |

After running long past its scheduled finish time, the United Nations Lima climate talks finally delivered an agreement on Sunday that should see all countries, not just developed ones, pledge to cut their emissions after 2020.

But the deal still leaves much uncertainty and could reduce the amount of scrutiny that countries' climate plans will receive as the negotiations inch their way towards a possible deal at the crucial talks in Paris next December.

Here, our experts give their verdicts on the summit and its outcome.

Peter Burdon, Senior Lecturer, University of Adelaide

The Lima climate deal has two critical consequences for developing countries.

First, clause 4 urges developed countries to “provide and mobilize” financial support to help developing countries deal with the effects of climate change. To date, only US$10 billion (A$12 billion) has been allocated to the Green Climate Fund (10% of the annual target).

This is clearly insufficient. As US Secretary of State John Kerry told delegates: “When Typhoon Haiyan hit the Philippines last year, the cost of responding to the damage exceeded US$10 billion.”

Second, developing countries succeeded in reintroducing clause 11, which recognises their “special circumstances” in setting emissions-reduction targets. Australia (alongside the United States) fought against special status, arguing that: “It doesn’t matter where the emissions come from, they are global emissions.”

This was the deepest fault line to emerge in Lima and we should expect it to erupt as pressure builds to reach a binding deal in Paris next year.

Nigel Martin, Lecturer, College of Business and Economics, Australian National University

While it was nice to see the Green Climate Fund (GCF) reach its US$10 billion capitalization pledge during COP20, there is still a long way to go in terms of reaching the US$100 billion funding level by 2020.

As a general principle, the A$200 million pledged by Australia is a good start, but if we look at our contribution to global emissions at about 1.3%, we only offered to fund around 0.2% of what is needed by 2020.
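The comparison in that paragraph is simple proportional arithmetic, sketched below in Python. This is an illustration under stated assumptions, not a reconstruction of Martin's own calculation: it treats A$ and US$ as equivalent (as the text implicitly does, with no exchange rate applied), and the "emissions-proportional pledge" is a hypothetical benchmark in which each country's contribution simply tracks its share of global emissions. The function names are hypothetical.

```python
# Figures quoted in the paragraph above.
# Assumption: A$ and US$ are treated as equivalent; no exchange rate is applied.
AUSTRALIA_PLEDGE = 200e6            # A$200 million pledged to the Green Climate Fund
ANNUAL_TARGET_2020 = 100e9          # US$100 billion a year by 2020
AUSTRALIA_EMISSIONS_SHARE = 0.013   # Australia's ~1.3% share of global emissions


def pledge_share(pledge: float, target: float) -> float:
    """Fraction of the funding target covered by a pledge."""
    return pledge / target


def emissions_proportional_pledge(emissions_share: float, target: float) -> float:
    """Hypothetical benchmark: the pledge if contributions tracked emissions share."""
    return emissions_share * target


if __name__ == "__main__":
    share = pledge_share(AUSTRALIA_PLEDGE, ANNUAL_TARGET_2020)
    benchmark = emissions_proportional_pledge(AUSTRALIA_EMISSIONS_SHARE, ANNUAL_TARGET_2020)
    print(f"Pledge covers {share:.1%} of the 2020 target")
    print(f"Emissions-proportional pledge: about ${benchmark / 1e9:.1f} billion, "
          f"{benchmark / AUSTRALIA_PLEDGE:.1f}x the actual pledge")
```

On these assumptions the pledge covers 0.2% of the target, while a purely emissions-proportional share would be about $1.3 billion, roughly 6.5 times the amount pledged.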

So in relative terms, while we probably need to do more, is it possible? Looking at the patchy Australian economy, and the International Monetary Fund’s global growth predictions of around 3.8% over 2015, the fiscal situation looks tight going forward.

Importantly, we think the GCF is probably going to need more money from private sector organizations in order to prosecute the mitigation and adaptation projects the UN has in mind.

It will be interesting to see whether private organizations in Australia, especially some of the big ones like BHP Billiton and Rio Tinto, commit to any funding. My guess is the Australian government will need to provide some tax incentives or look at issuing some type of ‘green’ bonds to get the private sector more engaged.

Ian McGregor, Lecturer in Management, UTS Business School

With 196 sovereign states involved and global energy systems a key part of global economic systems, the major problem is overcoming the resistance to major change.

The traditional United Nations negotiating system is not really the way to address this critical problem, as we need a system that builds a clear, shared global vision of an ecologically sustainable economic and social system.

Poverty is another major global issue, and developing countries are reluctant to move away from fossil fuels, since that is how developed countries got wealthy, as did some countries that are still classified as developing. For example, Saudi Arabia and Qatar have higher per capita gross domestic products and per capita emissions than most developed countries.

However, I do not know how we could move to a more effective system given how deeply this treaty/protocol negotiating process is entrenched in the UN system.

This article was originally published on The Conversation. Read the original article.

Labels: Climate Change, Political Change, Pollution, Social Change

Saturday, December 13, 2014

'Future Earth' Platform Outlines Global Change Strategy

Photo Credit: AP

by Mark Kinver

Environment reporter, BBC News: http://www.bbc.com/news/science-environment-30277519

A global initiative bringing together scientists across different disciplines has launched its strategy to identify key priorities for sustainability.

The document outlines what Future Earth, launched at the 2012 Rio+20 Summit, hopes to contribute towards the UN Sustainable Development Goals.

It has identified eight global challenges, including "water, energy and food for all" and decarbonisation.

The strategy also focuses on the roles of policymakers and funding bodies.

"Future Earth is a global research platform aimed at connecting the world's scientists across the regions and across disciplines to work on the problems of sustainable development and the solutions to move us towards sustainable development," explained Future Earth science committee vice-chairwoman Belinda Reyers.

"It really is an unprecedented attempt to consult with scientists across the world as well as with important stakeholders and policymakers," she told BBC News. "It will consider what kind of science is needed in the medium-term to really move us towards more desirable futures."

Dr Reyers - chief scientist at the Council for Scientific and Industrial Research (CSIR) in Stellenbosch, South Africa - said the strategy had been distilled down to eight "sustainability challenges":

- Deliver water, energy and food for all

- Decarbonise socio-economic systems

- Safeguard the terrestrial, freshwater and marine natural assets

- Build healthy, resilient and productive cities

- Promote sustainable rural futures

- Improve human health

- Encourage sustainable consumption and production patterns

- Increase social resilience to future threats

Dr Reyers explained the strategy aimed to build on the global Sustainable Development Goals (SDGs) process within the United Nations framework.

The SDGs are the successor to the UN Millennium Development Goals, which come to an end in 2015.

The concept of sustainable development - defined as "development that meets the needs of the present, without compromising the ability of future generations to meet their own needs" - has been on the international policy scene since the late 1980s and the publication of the Our Common Future report by the Brundtland Commission.

However, Dr Reyers said that the time was now right for a global science platform to work alongside the established policy framework.

"Future Earth builds on the legacy of the past 20 years of investment in sustainability science, but that investment was very fragmented".

"We are also much more aware of the future, thinking about challenges such as nine to eleven billion people on the planet in the coming decades and how we can feed them in a warmer and less predictable world".

"If you look at news headlines, the scale and complexity of sustainability challenges that we are facing are very evident, but also very different from those outlined in the Brundtland Commission documents. Now we have things like the Ebola outbreak in West Africa and the widespread droughts in California.

"Using sustainability challenges, societal needs and policy priorities to direct our science makes it both more relevant and accessible," she observed.

Thursday, December 11, 2014

How Solar Power Could Slay the Fossil Fuel Empire by 2030

A 1906 gasoline engine (Photo: Wikipedia)

In just 15 years, the world as we know it will have transformed forever. The age of oil, gas, coal and nuclear will be over.

A new age of clean power and smarter cars will fundamentally, totally, and permanently disrupt the existing fossil fuel-dependent industrial infrastructure in a way that even the most starry-eyed proponents of ‘green energy’ could never have imagined.

These are not the airy-fairy hopes of a tree-hugging hippy living off the land in an eco-commune.

It’s the startling verdict of Tony Seba, a lecturer in business entrepreneurship, disruption and clean energy at Stanford University and a serial Silicon Valley entrepreneur.

Seba began his career at Cisco Systems in 1993, where he predicted the internet-fueled mobile revolution at a time when most telecoms experts were warning of the impossibility of building an Internet the size of the US, let alone the world. Now he is predicting the “inevitable” disruption of the fossil fuel infrastructure.

Seba’s thesis, set out in more detail in his new book Clean Disruption of Energy and Transportation, is that by 2030 “the industrial age of energy and transportation will be over,” swept away by “exponentially improving technologies such as solar, electric vehicles, and self-driving cars.”

Google's autonomous car. Image: Steve Jurvetson, Wikimedia

Tremors of change

Seba’s forecasts are being taken seriously by some of the world’s most powerful finance, energy, and technology institutions.

Last November, Seba was a keynote speaker at JP Morgan’s Annual Global Technology, Media, and Telecom Conference in Asia, held in Hong Kong, where he delivered a stunning presentation on what he calls the “clean disruption.”

Seba’s JP Morgan talk focused on the inevitable disruption in the internal combustion engine. By his forecast, between 2017 and 2018, a mass migration from gasoline or diesel cars will begin, rapidly picking up steam and culminating in a market entirely dominated by electric vehicles (EV) by 2030.

Not only will our cars be electric, Seba predicts, but rapid developments in self-driving technologies will mean that future EVs will also be autonomous. The game change is happening because of revolutionary cost reductions in information technology, and because EVs are 90 percent cheaper to fuel and maintain than gasoline cars.

The main obstacle to the mass-market availability of EVs is the battery cost, which is around $500 per kilowatt-hour (kWh). But this is projected to fall dramatically in the next decade. By 2017, it could reach $350 per kWh, which is the battery price point where an electric car becomes cost-competitive with its gasoline equivalent.

Seba estimates that by 2020, battery costs will fall to $200 per kWh, and by 2024-25 to $100 per kWh. At this point, the efficiency of a gasoline car would be irrelevant, as EVs would simply be far cheaper. By 2030, he predicts, “gasoline cars will be the 21st century equivalent of horse carriages.”
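The battery milestones above can be turned into an implied constant annual rate of cost decline. This is a minimal sketch under stated assumptions: the ~$500/kWh figure is taken to apply to 2014, when the article was written, and the "2024-25" milestone is taken as 2024. The helper name `implied_annual_decline` is hypothetical, and this is not Seba's own method.

```python
# Implied constant annual decline in battery cost between two price points.
# ASSUMPTIONS (not from Seba): $500/kWh applies to 2014, and the
# "2024-25" milestone is taken as 2024.

def implied_annual_decline(start_cost: float, end_cost: float, years: int) -> float:
    """Fractional drop per year that takes start_cost to end_cost in `years` years."""
    if start_cost <= 0 or end_cost <= 0 or years <= 0:
        raise ValueError("costs and years must be positive")
    return 1.0 - (end_cost / start_cost) ** (1.0 / years)


BASE_YEAR = 2014   # assumed base year
BASE_COST = 500.0  # $/kWh, figure quoted in the article

# (year, projected $/kWh) milestones quoted in the article
MILESTONES = [(2017, 350.0), (2020, 200.0), (2024, 100.0)]

for year, cost in MILESTONES:
    rate = implied_annual_decline(BASE_COST, cost, year - BASE_YEAR)
    print(f"{year}: ${cost:.0f}/kWh implies ~{rate:.1%} lower cost each year")
```

Under these assumptions the 2020 and 2024 milestones work out to roughly 14-15 percent a year, in line with the 14-16 percent annual decline in li-ion costs cited later in the article, while the 2017 milestone implies a slower pace of about 11 percent.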

It took only 13 years for societies to transition from complete reliance on horse-drawn carriages to roads teeming with primitive automobiles, Seba told his audience.

Lest one imagine Seba is dreaming, in its new quarterly report, the leading global investment firm Baron Funds concurs: “We believe that BMW will likely phase out internal combustion engines within 10 years” (investors at rival bank Morgan Stanley are making a similar bet, and are financing Tesla).

Two days after his JP Morgan lecture, Seba was addressing the 2014 Global Leaders’ Forum in South Korea, sponsored by Korean government ministries for science and technology, where he elaborated on the prospects of an energy revolution. Within just 15 years, he said, solar and wind power will provide 100 percent of energy in competitive markets, with no need for government subsidies.

Over the last year Seba has even been invited to share his vision with oil and gas executives in the US and Europe. “Essentially, I’m telling them you’re out of business in less than 15 years,” Seba said.

Revolutionary economics of renewables

For Seba, there is a simple reason that the economics of solar and wind are superior to the extractive industries. Extraction economics is about decreasing returns. As reserves deplete and production shifts to more expensive unconventional sources, costs of extraction rise.

Oil prices may have dropped dramatically due to the OPEC supply glut, but costs of production remain high. Since 2000, the oil industry's investments have risen by 180 percent, nearly threefold, translating into a global oil supply increase of just 14 percent.

In contrast, the clean disruption is about increasing returns and decreasing costs. Seba, who dismisses biomass, biofuels and hydro-electric as uneconomical, points out that with every doubling of solar infrastructure, the production costs of solar photovoltaic (PV) panels fall by 22 percent.
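The 22 percent figure describes a learning (experience) curve: each doubling of the installed base multiplies unit cost by (1 - 0.22) = 0.78. A minimal sketch, assuming the rate stays constant across all doublings; `cost_after_growth` is a hypothetical helper, and the 1.4 GW to 141 GW example reuses the global PV figures quoted later in the article purely as an illustration.

```python
import math

LEARNING_RATE = 0.22  # cost drop per doubling of installed base, as quoted by Seba


def cost_after_growth(initial_cost: float, initial_capacity: float,
                      new_capacity: float, learning_rate: float = LEARNING_RATE) -> float:
    """Unit cost after installed capacity grows from initial_capacity to new_capacity.

    Assumes a constant learning rate per doubling:
        cost = initial_cost * (1 - learning_rate) ** log2(new / initial)
    """
    doublings = math.log2(new_capacity / initial_capacity)
    return initial_cost * (1.0 - learning_rate) ** doublings


# Three doublings (1 -> 8 units of capacity) cut unit cost to 0.78**3 of its start.
print(round(cost_after_growth(1.0, 1.0, 8.0), 4))  # 0.4746

# Illustration only: 1.4 GW -> 141 GW of global PV is about 6.7 doublings.
growth = 141.0 / 1.4
print(f"{math.log2(growth):.1f} doublings -> "
      f"{cost_after_growth(1.0, 1.4, 141.0):.2f} x starting cost")
```

Because cost depends on the number of doublings rather than on elapsed time, fast deployment and falling costs reinforce each other, which is the feedback Seba describes.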

“The higher the demand for solar PV, the lower the cost of solar for everyone, everywhere,” said Seba. “All this enables more growth in the solar marketplace, which, because of the solar learning curve, further pushes down costs.”

Globally installed capacity of solar PV has grown from 1.4 GW in the year 2000 to 141 GW at the end of 2013: a compound annual growth rate of 43 percent. In the United States, new solar capacity has grown from 435 megawatts (MW) in 2009 to 4,751 MW in just four years: an even higher annual growth rate of 82 percent.
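Both growth rates above are compound annual growth rates (CAGR): the constant yearly rate that turns the starting value into the ending value. A short check of the arithmetic, assuming a 13-year span for the global figure (2000 to 2013); `cagr` is a hypothetical helper name.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1.0 / years) - 1.0


# Global installed PV: 1.4 GW (2000) -> 141 GW (end of 2013), assumed 13 years
print(f"Global PV: {cagr(1.4, 141.0, 13):.0%} per year")   # Global PV: 43% per year
# US new capacity: 435 MW (2009) -> 4,751 MW four years later
print(f"US new PV: {cagr(435.0, 4751.0, 4):.0%} per year")  # US new PV: 82% per year
```

Note that the US figure is the growth rate of annual new installations, not of the cumulative installed base.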

Meanwhile, solar panel costs have fallen to about 1/154 of their 1970 level, dropping from $100 per watt to 65 cents per watt.

What we are seeing are exponential improvements in the efficiency of solar, the cost of solar, and the installation of solar. “Put these numbers together and you find that solar has improved its cost basis by 5,355 times relative to oil since 1970,” Seba said. “Traditional sources of energy can’t compete with this.”
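The "154 times" figure is simply $100 divided by $0.65. The article does not show how the 5,355 figure was derived; one reading, assumed here, is that it multiplies solar's cost decline by the rise in oil's price over the same period, in which case the implied oil-price multiple can be backed out. The constant names are illustrative.

```python
# Solar module cost, $/W, as quoted in the article
SOLAR_COST_1970 = 100.0
SOLAR_COST_NOW = 0.65

# Seba's figure for solar's improvement relative to oil since 1970
RELATIVE_IMPROVEMENT = 5355.0

solar_factor = SOLAR_COST_1970 / SOLAR_COST_NOW  # how many times cheaper solar became

# ASSUMPTION: relative improvement = solar cost decline x oil price increase,
# i.e. (solar_1970 / oil_1970) / (solar_now / oil_now).
implied_oil_factor = RELATIVE_IMPROVEMENT / solar_factor

print(f"Solar cost fell by a factor of {solar_factor:.0f}")                 # 154
print(f"Implied rise in oil price since 1970: x{implied_oil_factor:.1f}")  # x34.8
```

This only checks that the two quoted figures are mutually consistent under that reading; it does not verify the oil-price history itself.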

Solar plant. Image: BLM

A great delusion?

Other experts disagree. Renowned scientist Vaclav Smil of the University of Manitoba has studied the history of energy transitions, and argues that forecasts of an imminent renewable energy revolution are deluded.

It took between 50 and 75 years for fossil fuels to contribute significantly to national energy requirements, in circumstances where technology was cheap and supplying baseload power (operating 24 hours continuously) was not a problem. So the idea that renewables could be scaled up in decades is fantasy, he argues.

Similarly, Australian sustainability expert Prof Ted Trainer of the University of New South Wales and the Simplicity Institute argues that renewables cannot cope with demand in industrial consumer societies.

“The raw cost of PV is not crucial,” Prof Trainer told me. “Even if it was free it cannot provide any energy at all for about 17 hours on an average day, and in Europe there can be three weeks in a row with virtually no PV input.”

Trainer also flagged up ‘energy return on investment’ (EROI) - the quantity of energy one can get out compared to how much one puts in: “EROI for PV is around 3:1. It hardly matters what it costs if it’s down there.”
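EROI is a ratio of energy delivered to energy invested, so the share of gross output left over for other uses is 1 - 1/EROI. A minimal sketch; the function name is hypothetical, and the values other than Trainer's 3:1 are arbitrary comparison points, not estimates for any real energy source.

```python
def net_energy_fraction(eroi: float) -> float:
    """Fraction of gross energy output remaining after repaying the energy invested.

    EROI = energy out / energy in, so energy in = out / EROI and
    net share = 1 - 1 / EROI.
    """
    if eroi <= 0:
        raise ValueError("EROI must be positive")
    return 1.0 - 1.0 / eroi


# 3:1 is the PV figure Trainer cites; the others are arbitrary comparison points.
for eroi in (1.5, 3.0, 10.0, 30.0):
    print(f"EROI {eroi:>4}:1 -> {net_energy_fraction(eroi):.0%} of output is net energy")
```

Net energy changes little at high EROI but falls steeply as EROI approaches 1.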

Fossil fuel ostriches

“What the skeptics don’t understand is, when disruption happens, it happens swiftly, within two decades or even two years,” Seba told me. “Just ask anyone at your favorite camera film, telegraph, or typewriter company.”

Kodak, a photography giant in 2003, filed for bankruptcy in 2012, as the digital photography revolution swept away dependence on film. We’ve seen parallel disruptions with smartphones and tablets.

Seba’s main answer to Smil is to highlight the folly of extrapolating the potential for future energy transitions from the past. New clean energy industries are utterly different from old fossil fuel ones. It’s like arguing that the industrial revolution could never have happened, based on a study of the feudal dynamics of pre-industrial societies.

Costs of solar are not just decreasing exponentially; they will continue to do so due to increasing innovation, scale, and competition. “There are 300,000 solar installations in the US right now. By 2022, there will be 20 million solar installations in the US,” Seba predicts.

As a rule, Seba said, when a technology product achieves critical mass (historically defined as about 15-20 percent of the market), its market growth accelerates further, and sometimes exponentially, due to the positive feedback effects.

In hundreds of markets around the world, unsubsidized solar is already cheaper than subsidized fossil fuels and nuclear power. A new Deutsche Bank report just made headlines at the end of October for predicting that solar electricity in the US is on track to be as cheap or cheaper than fossil fuels as early as 2016.

Seba also dismisses concerns about baseload, pointing me to the new Solar Reserve 110 MW baseload solar plant in the Nevada desert, running on molten salt storage, that will power Las Vegas at night.

Meanwhile, increasing efficiencies and plummeting costs of lithium-ion (li-ion) batteries are already making night-time residential storage of PV and wind power cost-effective. Every year, li-ion battery costs drop by 14-16 percent.

By 2020, experts believe that li-ion will cost around $200-250 per kilowatt-hour (kWh), in which case, according to Seba: “A user could, for about $15.30 per month, have eight hours of storage to shift solar generation from day to evening, not pay for peak prices, and participate in demand-response programs.”

Power plant. Image: Pixabay, CC

Judgement Day

At the current rate of growth, Seba’s projections show, globally installed solar capacity will reach 56.7 terawatts (TW) in the next 15 years: equivalent to 18.9 TW of conventional baseload power. That would be enough to power the world, and then some - projected world energy demand at that time would be 16.9 TW.
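The two terawatt figures are consistent with a simple conversion from nameplate capacity to average ("baseload-equivalent") output via a capacity factor. The sketch below assumes that is how the 18.9 TW was obtained and backs out the implied factor; it checks the arithmetic only, not whether that factor is realistic for solar in any given location.

```python
# Figures quoted in the article, in terawatts (TW)
SOLAR_NAMEPLATE_TW = 56.7    # projected installed solar capacity
BASELOAD_EQUIV_TW = 18.9     # stated baseload-equivalent output
PROJECTED_DEMAND_TW = 16.9   # projected world energy demand

# ASSUMPTION: baseload equivalent = nameplate capacity x average capacity factor
implied_capacity_factor = BASELOAD_EQUIV_TW / SOLAR_NAMEPLATE_TW
surplus_tw = BASELOAD_EQUIV_TW - PROJECTED_DEMAND_TW

print(f"Implied capacity factor: {implied_capacity_factor:.1%}")  # 33.3%
print(f"Margin over projected demand: {surplus_tw:.1f} TW")       # 2.0 TW
```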

Paul Gilding, who has spent the last 20 years advising global corporations such as Ford, DuPont and BHP Billiton, among many others, on sustainable business strategy, agrees that the trends Seba highlights imply “a disruptive transformational system change” that outpaces the “assumptions built on the old world view of centralised generation.”

Author of The Great Disruption, Gilding said that “it’s the systemic interactions of software, new players, disruptive business models and technology that accelerates the shift,” and which “will be self reinforcing” - not just cheap prices.

EROI concerns are therefore a red herring. Seba argues that the minimal costs of maintaining solar panels, which last many decades, coupled with free energy generation once the initial costs are repaid, mean that the real EROI of solar is dramatically higher than that of fossil fuels in the long run.

“Should solar continue on its exponential trajectory, the energy infrastructure will be 100-percent solar by 2030,” Seba said. “The only reason for this not to happen is that governments will protect or subsidize conventional coal, nuclear, oil, gas generating stations - even when this means higher prices for consumers.”

While solar has already reached ‘grid parity’, becoming as cheap or cheaper than utility rates in many markets, within five years Seba anticipates the arrival of what he calls ‘God Parity’: when onsite rooftop solar generation is cheaper than transmission costs.

Then, even if fossil fuel plants generated at zero cost (an impossibility), they could never compete with onsite solar. So after 2020, the conventional energy industry will start going bankrupt.

The cost of wind, which complements solar at night and in winter, is also plummeting, and wind will beat every other energy source except solar in the same time frame, according to his analysis.